Abstract

Today, videos are the primary way in which information is shared over the Internet. Given the huge popularity of video sharing platforms, it is imperative to make videos engaging for the end-users. Content creators rely on their own experience to create engaging short videos starting from the raw content. Several approaches have been proposed in the past to assist creators in the summarization process. However, it is hard to quantify the effect of these edits on the end-user engagement. Moreover, the availability of video consumption data has opened the possibility to predict the effectiveness of a video before it is published. In this paper, we propose a novel framework to close the feedback loop between automatic video summarization and its data-driven evaluation. Our Closing-The-Loop framework is composed of two main steps that are repeated iteratively. Given an input video, we first generate a set of initial video summaries. Second, we predict the effectiveness of the generated variants based on a data-driven model trained on users' video consumption data. We employ a genetic algorithm to search the space of possible summaries (i.e., adding/removing shots to the video) in an efficient way, where only those variants with the highest predicted performance are allowed to survive and generate new variants in their place. Our results show that the proposed framework can improve the effectiveness of the generated summaries with minimal computation overhead compared to a baseline solution -- 28.3% more video summaries are in the highest effectiveness class than those in the baseline.

Evaluation

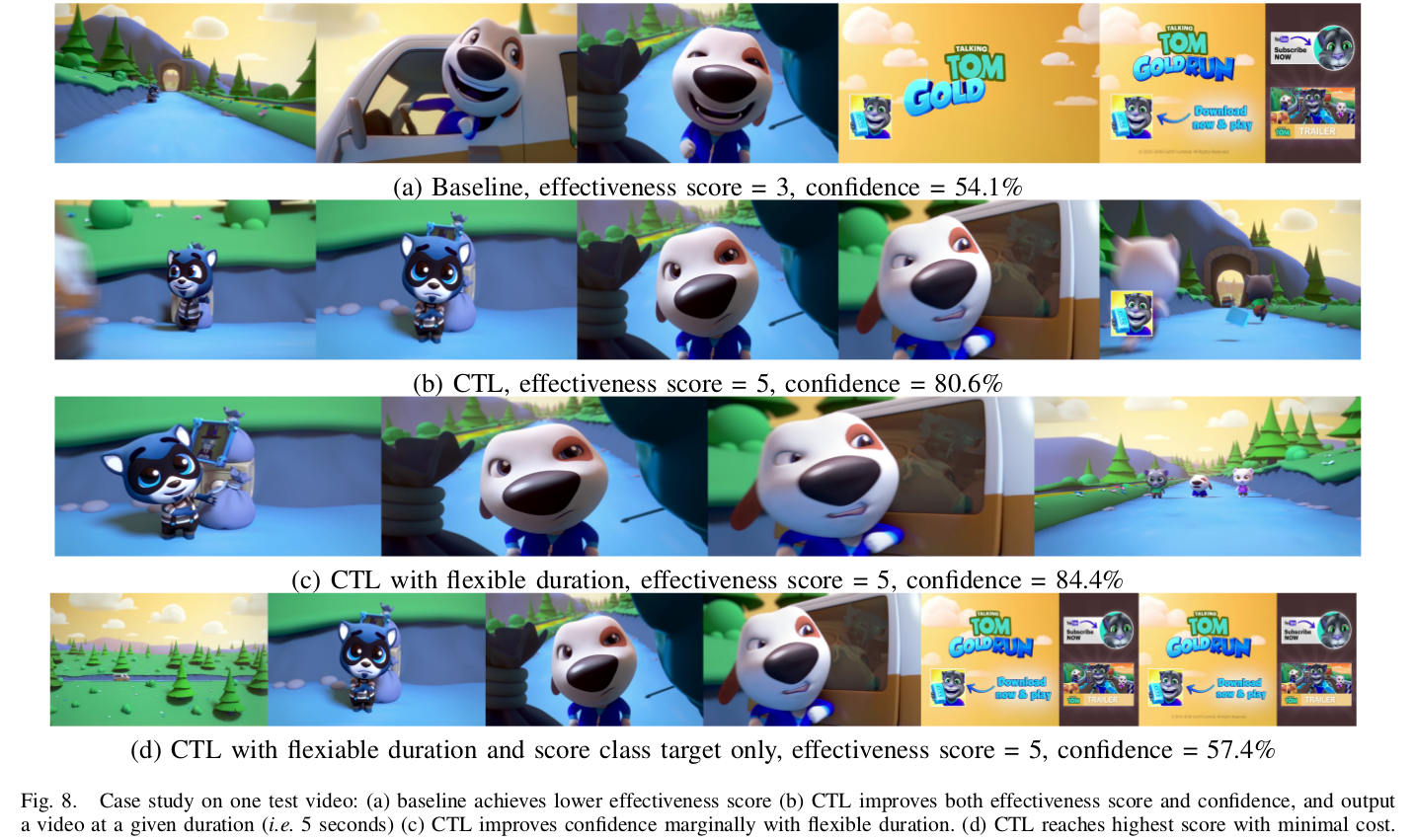

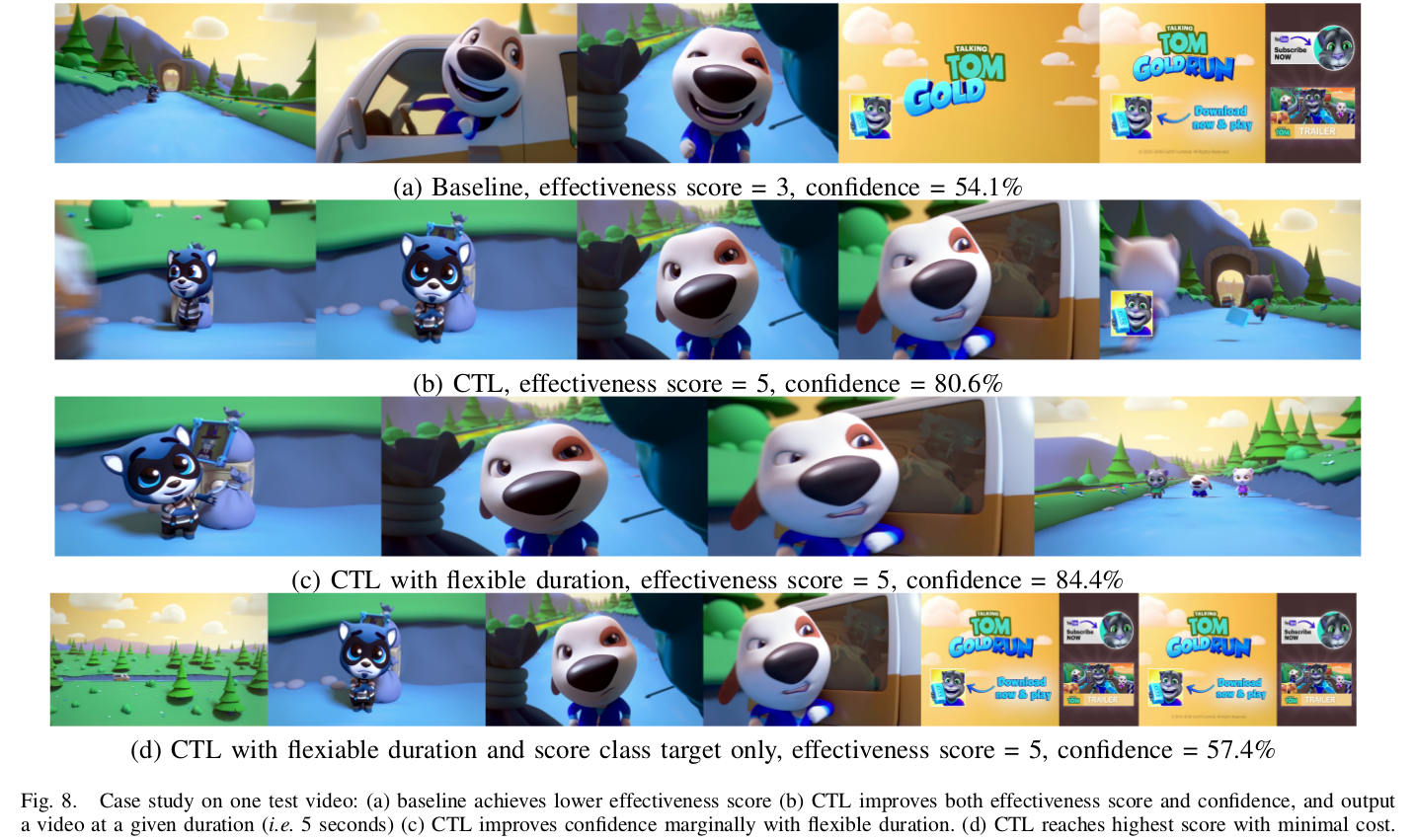

We provide the qualitative visualization of the output videos as case studies. Fig. 8(a) shows the 5 representative frames of the summary generated by the baseline algorithm -- the effectiveness score is equal to 3 with 54.1% confidence. Fig. 8(b) shows the output generated by the CTL framework -- the score class improves to 5 with 80.6% confidence. We further explore several cost-performance trade-offs, based on slightly changed summarization requirements. First, we relax the constraint on the final summary duration, meaning that the final summary can be of any length. Fig. 8(c) shows that the generated summary has a duration equal to 4 seconds. We are able to reach the same highest score class as in the fixed duration summary (Fig. 8 (b)) and even higher confidence -- 84.4%. We next showcase another low-cost trade-off by removing the constraint on generating a summary with high confidence. In this case, we are still able to generate an output summary in the highest effectiveness class (Fig. 8 (d)).

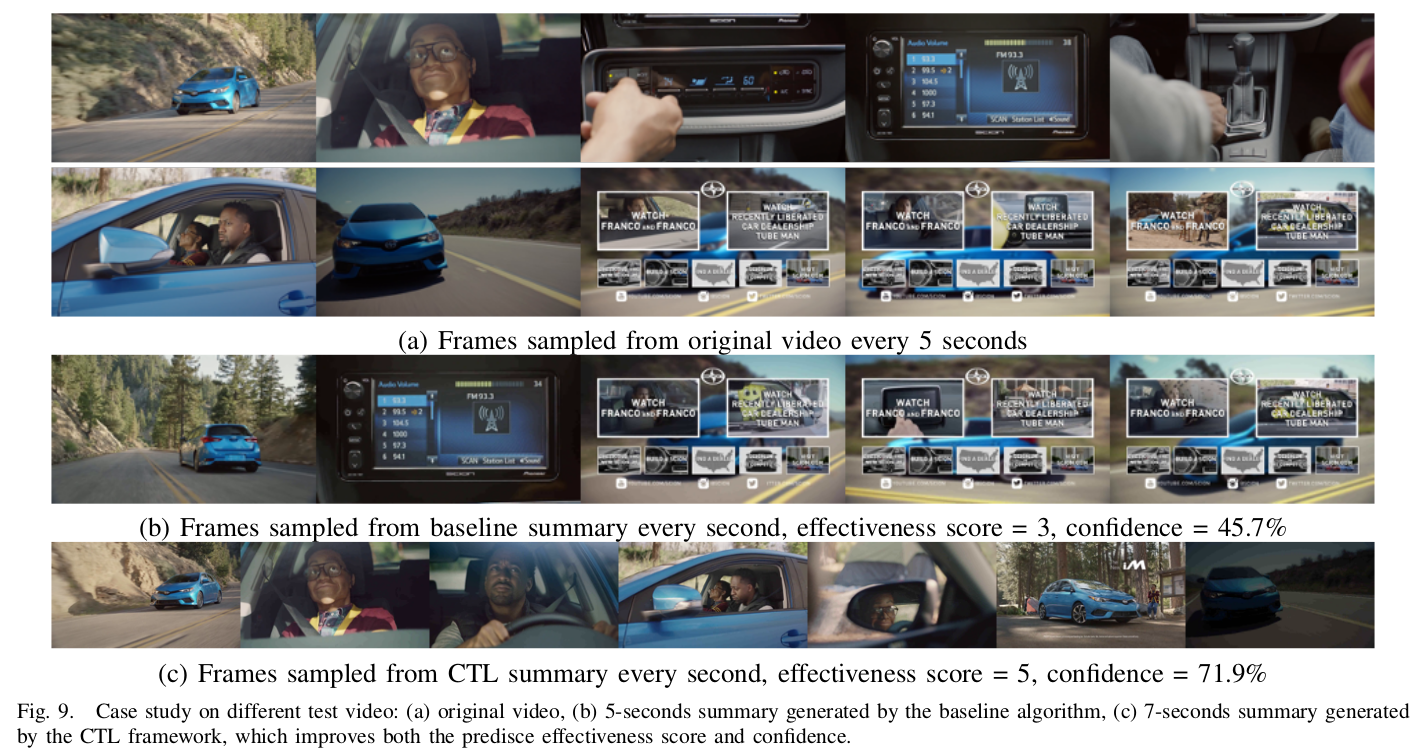

Additionally, we show a second case study, in Figure 9, a 2016 Scion iM TV commercial. Fig. 9(a) describes the full story: Jaleel White drives with "Family Matters" wax museum Steve Urkel in the passenger seat. The dual zone automatic climate control keeps both Jaleel warm and his wax-self from melting. Jaleel finds himself starting to say Urkel's famous line, "Did I do that?" only to catch himself mid-phrase, when he sees Urkel is staring back at him with his iconic smile. As seen from Fig. 9(b), the baseline summarization fails to capture the story. Instead, it chooses three similar frames in the end. In Fig. 9(c), our approach selects not only the right shots but also the appropriate number of shots, which captures the original story well.

Cite

If you find this work useful in your own research, please consider citing:

@inproceedings{xu2020closing,

title={{Closing-the-Loop}: A Data-Driven Framework for Effective Video Summarization},

author={Xu, Ran and Wang, Haoliang and Petrangeli, Stefano and Swaminathan, Viswanathan and Bagchi, Saurabh},

booktitle={Proceedings of the 22nd IEEE International Symposium on Multimedia (ISM)},

pages={201--205},

year={2020}

}

For questions about paper, please contact Ran Xu at martin.xuran@gmail.com